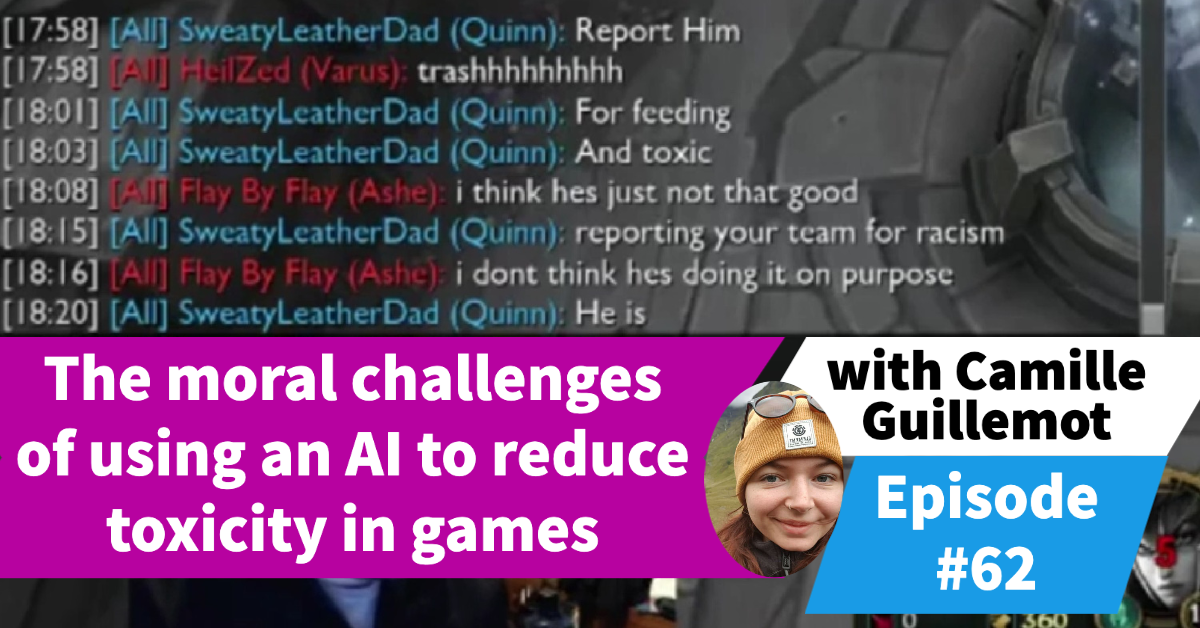

[Release Date: February 14, 2023] Toxicity in online gaming is an incredibly complex problem to solve. Teams of moderators often seem hopelessly outmatched by the amount of toxicity and its sometimes ambiguous nature. But what if we brought an AI into the game to help us with both toxicity and fraud by bots, which are essentially other AIs? In this episode we look at one company’s attempt to do just that.

SHOW TRANSCRIPT

00:02:36.880 –> 00:02:43.129

Shlomo Sher: All right, welcome, everybody. We’re here with Camille. Oh, you know, Camille, let me stop again and ask you

28

00:02:43.350 –> 00:02:45.829

Shlomo Sher: your last name.

29

00:02:45.990 –> 00:02:49.990

camille: yeah. Oh, perfect right on point.

30

00:02:50.010 –> 00:02:51.230

Shlomo Sher: Okay.

31

00:02:51.280 –> 00:02:52.250

Shlomo Sher: cool.

32

00:02:52.410 –> 00:02:56.749

Shlomo Sher: It’s been a while since I got one right. All right. That was perfect.

33

00:02:57.340 –> 00:03:15.080

Shlomo Sher: All right, everybody. We’re here with Camille Guillemot. Camille started her career fighting fraud, making gaming a safer space for gamers. But she quickly realized that fraud was only one part of the puzzle, decided to engage herself more on topics that matter to her, and joined Bodyguard as its gaming partnership manager,

34

00:03:15.090 –> 00:03:29.200

Shlomo Sher: where she’s now dedicating her energy to making gaming a safer and better place for all players, developers and content creators. Camille, welcome to the show. camille: Yeah, thank you for having me. Thank you so much. I’m really happy to be here.

35

00:03:29.860 –> 00:03:49.299

Shlomo Sher: All right. So I told you before we started today, Camille, you’re from a company called Bodyguard, and you’re the first person we’ve actually had from a company, and we hope to do more. We’ve generally tried to stay away from that, but we think what you’re doing is so interesting,

36

00:03:49.310 –> 00:04:07.439

Shlomo Sher: and the way that you yourself are thinking about it is so interesting, that we really wanted to have this conversation with you. So let me jump right into it. Let’s say my studio has a game out, and I’ve been told that our players’ ability to communicate with each other really freely has led to a toxic environment.

37

00:04:07.450 –> 00:04:12.570

Shlomo Sher: Right? How can a company like yours help me deal with the toxicity that occurs in my game?

38

00:04:13.580 –> 00:04:37.459

camille: So in the world of online gaming, toxicity includes a lot of different things, actually: sexual harassment, hate speech, threats of violence, doxxing. It can also be, you know, grooming, spamming, flaming. It’s actually a lot of different things.

39

00:04:37.470 –> 00:04:42.030

camille: And what we do at Bodyguard is that we

40

00:04:42.190 –> 00:05:02.160

camille: go get actors from the gaming industry, so publishers, developers, esports players, streamers, and we try to build with them environments that are safer and more inclusive using moderation. So today we know that, you know,

41

00:05:02.170 –> 00:05:11.450

camille: gaming is more about, you know, connecting with each other, about communities, interactions, and sometimes it leads to, you know,

42

00:05:11.460 –> 00:05:37.799

camille: a very toxic environment, where people are insulting each other, being verbally violent to each other, and all that kind of situation that you guys are probably aware of. And what we do is that we try to build moderation that fits the spirit of their games, that fits the environment and the type of connection they want to make with their community, to create a safe and inclusive environment for them and for their players.

43

00:05:37.810 –> 00:05:55.660

Shlomo Sher: So you said, we’re trying to build a moderation. All right, so explain: what does that mean in your context? camille: So the context is that today we have developed a technology that does moderation work. So it’s a technology that is capable of

44

00:05:55.790 –> 00:06:05.540

camille: finding, analyzing and moderating content in real time. So this is the technology that we developed.

45

00:06:05.670 –> 00:06:20.099

camille: But the thing is, today, with gaming, you know, you’re not going to moderate an FPS shooter the same way you are going to moderate a Monopoly Plus or Just Dance game. It’s

46

00:06:20.110 –> 00:06:31.140

camille: two different worlds, two different player bases. So what I mean by building a moderation with them is that we use the technology that we have, we use our expertise, our knowledge

47

00:06:31.150 –> 00:06:49.840

camille: on moderation, and we try to find the best way to use that technology for them to build a safe environment. So the technology is very, you know, very easy to modulate and to customize. It’s rule-based, so, you know, it’s really easy to tailor to

48

00:06:49.850 –> 00:07:02.350

camille: someone’s needs. And what we do is we use our technology, which is already high performing, to make sure that it fits the spirit of their game and the type of moderation they want for their games.

49

00:07:02.460 –> 00:07:05.439

A Ashcraft: Right. So I think it’s important to just

50

00:07:05.550 –> 00:07:07.509

step back a little bit and say,

51

00:07:08.830 –> 00:07:11.930

prior to this, most moderation is done by humans.

52

00:07:12.120 –> 00:07:20.529

camille: Yes. A Ashcraft: People are actually just monitoring what’s going on in the chat, or listening in on what’s happening, and then applying their

53

00:07:21.030 –> 00:07:34.809

A Ashcraft: own sense of what’s right and wrong, and having tools that they can use to either mute people or ban people, or do all these things, right? So what you’re talking about is actually an AI,

54

00:07:35.150 –> 00:07:44.840

or, you know, it replaces the human moderator with a computer, which can do things faster and more efficiently.

55

00:07:45.470 –> 00:07:53.190

Shlomo Sher: and maybe more rule-based, which is interesting, because, you know, in our previous conversations on this topic,

56

00:07:53.250 –> 00:08:10.399

Shlomo Sher: you know, we always try to get to principles, right? And when you have a bunch of individuals, people operate a lot of the time really on a case-by-case basis. But if you have an AI moderating conversation

57

00:08:10.680 –> 00:08:25.160

Shlomo Sher: as it happens, which is, you know, to me pretty incredible, when that happens you need those kinds of principles, and we want to talk to you partly about that. But

58

00:08:25.170 –> 00:08:31.609

Shlomo Sher: before we do that, let’s talk about the whole challenge of controlling... Actually, sorry, before I do that: hey, Andy,

59

00:08:32.000 –> 00:08:37.950

Shlomo Sher: can you just check? Suddenly the volume on your

60

00:08:38.090 –> 00:08:49.290

Shlomo Sher: mic went down. Just make sure that everything is okay. Yeah, everything’s fine. Everything’s fine. It looks good on my recording. So, okay, never mind. Okay. So let me go back.

61

00:08:49.310 –> 00:08:51.380

Shlomo Sher: but before we do.

62

00:08:51.390 –> 00:09:16.990

Shlomo Sher: And before we get into any of that, I want to know about the problem of toxicity itself. You know, right now companies hire moderators, human moderators, that, you know, your AI may replace, right? And they’re there to do something, and their job is very hard to do. Why is it so hard to control toxicity in games in a way that’s both moral and satisfying to the players?

63

00:09:17.550 –> 00:09:30.379

camille: For so many reasons, actually. Because first, when the games were designed, they were designed, you know, to connect people, but they were not designed taking into account

64

00:09:30.600 –> 00:09:47.570

camille: toxicity and moderation. You know, it was just about leaving a chat box open for everyone, so everyone can connect. It was not, you know, taking into consideration: okay, but what if someone, you know, loses, and is a sore loser? Like,

65

00:09:47.740 –> 00:09:55.099

camille: you know, all of these considerations were not taken into account, actually. So

66

00:09:55.110 –> 00:10:13.140

camille: today it’s just, you know, about creating gaming experiences to unify everyone. And usually these problems are very much put aside. We tend to see big changes in that, but today it’s still happening.

67

00:10:13.150 –> 00:10:32.359

camille: There is also, as you said, a lack of scalability. Because there are humans behind the moderation, it’s very difficult to moderate everyone. Can you imagine how many games of League of Legends are played per hour? It’s massive. You cannot have humans behind

68

00:10:32.370 –> 00:10:33.780

camille: all of you know.

69

00:10:34.220 –> 00:10:45.939

camille: all of the games, trying to moderate. So there is this problem of scalability, because we are so many players in the world. So this is something that is missing. There is also

70

00:10:45.950 –> 00:11:03.759

camille: the question of centralization, you know. In big publishers’ hierarchies, what is happening also is that you have studios that handle their projects on their own, very separately, and there is no coherence when it comes to

71

00:11:03.820 –> 00:11:18.490

camille: community guidelines, player base guidelines. We see today the creation of teams like diversity and inclusion or player safety teams, but they are very recent. I think maybe five years ago none of them

72

00:11:18.790 –> 00:11:30.120

camille: existed. None of them were trying to think about those problems, you know. But it’s starting to change. So there is this difficulty of scalability. There is

73

00:11:30.210 –> 00:11:35.699

camille: also the freedom that being behind the screen offers. There is, like, you know,

74

00:11:35.800 –> 00:11:42.009

camille: this kind of, I’m kind of hidden, protected by my screen, and I will allow myself to,

75

00:11:42.090 –> 00:11:58.119

camille: you know, say stuff that I wouldn’t dare say to someone’s face in the street. So there is that. We’re anonymized: we have, you know, handles, game handles, instead of using our real names. And so we’re anonymized in that way. There are no repercussions.

76

00:11:58.750 –> 00:12:18.429

camille: Exactly. So, you know, all of those factors contributed to the fact that today toxicity is very difficult to tackle. There is a lot of catch-up that needs to be done also on the publishers’ and developers’ side, because they are still, you know, trying to figure out how to do that. Some of them just

77

00:12:18.440 –> 00:12:35.180

camille: don’t want multiplayer features in their game. You know, they are like, okay, I don’t want to deal with those kinds of topics, so I’m just not going to put in multiplayer features. So it’s really difficult today to control all of this, because of all those factors.

78

00:12:35.470 –> 00:12:41.439

Shlomo Sher: You know, we had an episode about toxicity, and our guests essentially called it a wicked problem.

79

00:12:41.670 –> 00:12:51.990

Shlomo Sher: You remember, you know, a wicked problem in the sense that it’s one of the really, really, really tough problems out in the world. So, you know,

80

00:12:52.500 –> 00:12:53.890

Shlomo Sher: It’s

81

00:12:54.050 –> 00:12:56.310

Shlomo Sher: It’s a challenge that seems

82

00:12:57.390 –> 00:13:04.670

Shlomo Sher: just so incredibly tough to get right. But here you are coming in with an AI,

83

00:13:04.680 –> 00:13:23.669

Shlomo Sher: right? And we’re very curious to see. Well, okay, I mean, what are the kind of relative advantages or disadvantages of trying to resolve this with an AI as opposed to human monitors. I think you’ve already come up with one which is just a scalability issue.

84

00:13:23.680 –> 00:13:33.189

camille: Yes, so the beauty of Bodyguard, and I’m going to preach a bit for my own chapel, but the beauty of Bodyguard, and what

85

00:13:33.240 –> 00:13:50.939

camille: Charles, the person who created the technology, has been really thinking about, is that he wanted to take the best of both worlds, with human and machine. You know, it’s about taking from the AI the scalability, being able to work in real time,

86

00:13:50.950 –> 00:14:08.700

camille: being able to moderate massive numbers today, fast. It’s also about protecting the mental health, you know, of those teams that do moderation work, because I’ve seen a documentary about people doing moderation for Facebook, Meta, and it’s absolutely

87

00:14:08.710 –> 00:14:25.260

camille: horrific. They are seeing violent images, violent text every day. Even for us, sometimes, when I need to, you know, go see the data that we have been analyzing, and I’m seeing a lot of stuff,

88

00:14:25.270 –> 00:14:39.880

camille: it’s really, really hard. You know, when I spend an hour, maybe, just reading comments, I see horrible, horrible stuff, so I cannot imagine what those people experience every day, eight hours a day, doing this. So it’s

89

00:14:39.890 –> 00:15:03.829

camille: also about preserving humans’ eyes, humans’ mental health, from that. And there is also this idea that we are fighting AI, especially in the case of fraud, because usually you have bots, you know, flooding, creating new ways of phishing people and all of that. So having an AI fighting another AI is also really interesting for us.

90

00:15:03.840 –> 00:15:04.730

camille: That’s

91

00:15:04.960 –> 00:15:19.420

A Ashcraft: Wow. I never remotely thought about that. Yeah, I’m fascinated by it from a sort of William Gibson point of view. Yeah, it’s out there, that these AIs are fighting each other, one to protect our mental health and the other to,

92

00:15:19.430 –> 00:15:32.999

Shlomo Sher: I don’t know, sell us stuff, probably. Yes, rob us, you know. I mean, trick us into anything at all, right? camille: Exactly. So that’s exactly what we’ve been working on

93

00:15:33.010 –> 00:15:51.140

camille: with one of the editors that we’ve been working with. They had a massive issue with bots, you know, creating gray markets, trying to pull the players out of the official ecosystem so they can buy in-game currency elsewhere. So they had a massive issue with that,

94

00:15:51.150 –> 00:15:58.629

camille: because first, they were spamming. They were also ruining the economy of the game. So we had to think about, okay, how can we, you know,

95

00:15:58.640 –> 00:16:21.769

camille: counter that using our technology. So it was about learning how they were behaving, you know, the patterns, and then creating something that will counter that. So yes, it’s exactly that, creating AI against AI. But, as I said, there is also the human side that we cannot, you know, ignore. You cannot moderate humans just by using

96

00:16:21.780 –> 00:16:37.729

camille: machines. It’s something that, you know, needs to be fueled by humans, because in the end, moderation and toxicity can be something really subjective. So you need to make sure that you have humans understanding what’s going on.

97

00:16:37.740 –> 00:16:45.440

camille: So with the humans, as I said, it’s rule-based, and those rules are created by our linguists,

98

00:16:45.470 –> 00:17:04.609

camille: and what they do is that they, you know, try to find the new trends of toxicity, because with their human brains and their human emotions, they can understand the subtlety in toxic sentences. You know, sometimes you have very toxic content that doesn’t contain any

99

00:17:04.970 –> 00:17:08.229

camille: swear words, and not even a little

100

00:17:08.760 –> 00:17:18.079

camille: fuck. Sorry. You’re allowed to use it. Okay. I don’t, because, you know, when you are in the moderation business, you just

101

00:17:18.160 –> 00:17:35.390

camille: start to you know. Get Really.

102

00:17:35.400 –> 00:17:40.630

camille: this is, you know, this is toxic and I think it’s it’s quite beautiful, you know, because it’s

103

00:17:41.020 –> 00:17:53.599

camille: you know, in sci-fi, it’s the whole deal: when do we consider an AI as intelligent as a human? Because they don’t understand emotions. But we’re trying to

104

00:17:53.610 –> 00:18:12.750

camille: teach them emotions as well, like, what would a human feel if they read that sentence? And that’s, you know, the human part of the technology that we do cherish, because we do feel it’s really important to keep a human side to the moderation that we perform.

105

00:18:12.960 –> 00:18:16.919

A Ashcraft: Yeah, because there’s a lot of nuance between, you know.

106

00:18:17.350 –> 00:18:30.269

A Ashcraft: using the F word to call someone, you know, as some sort of slur, or something like that, and just going off when I’ve lost a game. camille: Exactly.

107

00:18:30.280 –> 00:18:43.670

camille: So context is something that we really work on. It’s really important at Bodyguard, and that’s why I’m emphasizing it: we are a contextual moderation solution. We don’t work on keywords,

108

00:18:43.680 –> 00:18:56.559

camille: because, as you mentioned, “fuck” in a sentence can mean so many things. You need to understand the full context. So we work on the context. And, you know, another example of that is

109

00:18:56.570 –> 00:19:06.359

camille: something really specific to gaming. Very recently we had a lot of misogyny. I mean, I’m not saying it’s recent. It has always been there, but we are being more vocal about it.

110

00:19:06.410 –> 00:19:26.230

camille: And, you know, it’s like “go make me a sandwich,” which a lot of people are using against female streamers to say, you have no place in here. So in essence, “go make me a sandwich”: an AI wouldn’t understand that, but we as humans, because we have all the context and everything, do understand that

111

00:19:26.390 –> 00:19:39.399

camille: this shouldn’t be said to a streamer live. And the beauty of being rule-based is that we can actually say to the AI: okay, “go make me a sandwich” in this context should be moderated.
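
As a rough illustration of the idea described here (a phrase that is harmless on its own but moderated in a specific context), the following is a minimal sketch. The `Rule` class, the context label and the `should_moderate` function are all hypothetical names chosen for this example; the episode does not describe Bodyguard’s actual rule format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A hypothetical hand-written rule: a phrase plus the contexts where it is toxic."""
    phrase: str
    contexts: frozenset


# Illustrative rule only; the context label is an assumption, not a real taxonomy.
RULES = [
    Rule("go make me a sandwich", frozenset({"female_streamer_chat"})),
]


def should_moderate(message: str, context: str, rules=RULES) -> bool:
    """Return True when a rule's phrase appears in the message AND the context matches."""
    text = message.lower()
    return any(rule.phrase in text and context in rule.contexts for rule in rules)
```

With this sketch, the same sentence is flagged in a streamer’s live chat but left alone in, say, the chat of a cooking game, which is the difference between contextual rules and a plain keyword list.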

112

00:19:39.450 –> 00:19:42.450

Shlomo Sher: So just to be clear,

113

00:19:42.930 –> 00:20:01.859

Shlomo Sher: as I’m trying to wrap my head around this. Originally I thought, you know, you have an AI and the AI also does learning, AI learning, I’m assuming, from the context. So either an AI can look at a space and essentially get feedback from the space and

114

00:20:01.870 –> 00:20:10.359

Shlomo Sher: develop kind of its own algorithm based on that feedback, or the AI can be fed with rules from the very beginning,

115

00:20:10.380 –> 00:20:23.830

Shlomo Sher: and continuous rules. Which is yours? And the former ones, the ones where the AI just learns from the space, have famously led to a bunch of AIs that were sexist or racist or...

116

00:20:23.910 –> 00:20:27.240

Shlomo Sher: So, exactly, is that what you guys are trying to fix here?

117

00:20:27.850 –> 00:20:43.750

camille: So we are trying to fix it by not using what we would call machine learning, because machine learning is exactly that. You know, the AI is learning by itself with the database that it’s allowed to access. We don’t want that, because,

118

00:20:43.890 –> 00:21:01.330

camille: again, machine learning is highly inefficient for moderation, in our opinion. And again, you have so many new trends. Today people can write words without, you know, all the letters. They find new ways, you know, adding commas between the letters, or so many other creative ways.

119

00:21:01.340 –> 00:21:19.190

camille: So using machine learning for moderation is not efficient, and that’s not what we’re using. What we use is our linguists, and they feed the AI with rules. So, saying: this, plus this, plus this, in this context, shouldn’t be allowed.
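
To make the two ideas in this passage concrete (undoing tricks like commas between letters, then applying a “this, plus this, in this context” rule), here is a minimal sketch under stated assumptions. The names `normalize`, `ContextRule` and `violates`, the separator set and the context labels are all hypothetical; this is not Bodyguard’s implementation, which the episode does not detail.

```python
import re
from dataclasses import dataclass

# Single letters split by punctuation, e.g. "m,u,r,d,e,r" or "m.u.r.d.e.r".
# The set of separators is an assumption made for this example.
_SPLIT_WORD = re.compile(r"\b(?:[a-z][.,_*\-])+[a-z]\b")


def normalize(message: str) -> str:
    """Lowercase the message and rejoin words whose letters were split by punctuation."""
    text = message.lower()
    return _SPLIT_WORD.sub(lambda m: re.sub(r"[^a-z]", "", m.group()), text)


@dataclass(frozen=True)
class ContextRule:
    """Hypothetical rule: all required terms present AND the context matches."""
    required_terms: frozenset
    context: str


def violates(message: str, context: str, rules) -> bool:
    """Return True if any rule's terms all occur in the normalized message for this context."""
    words = set(normalize(message).split())
    return any(
        rule.context == context and rule.required_terms <= words
        for rule in rules
    )
```

A rule such as `ContextRule(frozenset({"murder", "them"}), "kids_photo_page")` would then catch an obfuscated threat on one page while ignoring the same words elsewhere. Splitting on whitespace is a deliberate simplification; a real system would need proper tokenization.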

120

00:21:19.200 –> 00:21:30.999

camille: That’s why it’s so easy to customize for the different, you know, people we’re working with. Because obviously, if you say to someone on, I don’t know, like,

121

00:21:31.200 –> 00:21:47.609

camille: something under a picture of kids, and you say, oh, I’m gonna murder them, it’s very different from someone saying, under a video game post, you know, of a shooter, oh, I’m gonna murder them, because it’s so different. Like,

122

00:21:47.620 –> 00:21:55.679

camille: the context is very different. Or “I’m going to murder you” is not the same thing in a shooter’s in-game chat as “I’m going to murder you” in

123

00:21:56.330 –> 00:22:05.409

Shlomo Sher: in Just Dance, you know. But, by the way, to get some clarity, are we only talking about text-based communication or also voice?

124

00:22:05.420 –> 00:22:18.600

camille: Yes, at the moment we are focusing on text. Audio is something that we think about, but at the moment I think it’s too early. We already see

125

00:22:18.630 –> 00:22:29.459

camille: changes when it comes to moderation, but we are still not quite there, I mean, on the market side. So maybe in the future, I hope.

126

00:22:29.620 –> 00:22:45.430

camille: I mean, it’s a huge jump from text to voice. I mean, it is. It’s a huge jump. Also, can you do this in real time, like you do with writing? You know, so many questions, so many questions. And it also sounds like you have to, because you mentioned that, you know, having a picture

127

00:22:45.480 –> 00:22:53.090

Shlomo Sher: in the text stream, and then and then the context of the picture.

128

00:22:53.340 –> 00:22:55.220

camille: So how crazy, difficult!

129

00:22:55.520 –> 00:23:07.990

camille: So the way we are working at the moment is that the rules are set per page, per page or platform that we are protecting. So, for example,

130

00:23:08.020 –> 00:23:12.790

camille: we don’t cross information between pictures and text yet.

131

00:23:12.870 –> 00:23:25.310

camille: but what we do is that, oh, we know that this page, this Twitter account that we are protecting, is aimed at a young audience. So we know that those kinds of

132

00:23:25.320 –> 00:23:37.660

camille: sentences shouldn’t be found on this, you know, this page. So that’s how we do it. But one day, maybe, I hope that we’ll be able to cross as much information as possible.
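
The per-page setup described above can be pictured as a small configuration lookup. This is only a sketch under assumptions: the audience tiers, category names and page names below are hypothetical and chosen for illustration, not taken from Bodyguard’s product.

```python
from enum import Enum


class Audience(Enum):
    YOUNG = "young"
    GENERAL = "general"
    MATURE = "mature"


# Hypothetical content categories blocked for each audience tier.
BLOCKED = {
    Audience.YOUNG: {"hate", "threat", "sexual", "profanity"},
    Audience.GENERAL: {"hate", "threat", "sexual"},
    Audience.MATURE: {"hate", "threat"},
}

# Hypothetical protected pages mapped to the audience they are aimed at.
PAGES = {
    "kids_game_twitter": Audience.YOUNG,
    "shooter_ingame_chat": Audience.MATURE,
}


def is_allowed(page: str, category: str) -> bool:
    """Check a message category against the rule set configured for this page."""
    audience = PAGES.get(page, Audience.GENERAL)  # unknown pages fall back to GENERAL
    return category not in BLOCKED[audience]
```

The design choice here is that the page, not the individual message, selects which rules apply, which matches the description of rules being set per protected page or platform.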

133

00:23:38.020 –> 00:23:45.510

Shlomo Sher: Right. I mean, yeah, this is the scalability of this. There’s just so much data involved here in real time.

134

00:23:45.540 –> 00:23:59.160

Shlomo Sher: You know, the other part, and I want to get, obviously, to the actual thoughts about moderation and the principles you guys are using, and all that. But one bit of clarity: you said linguists, which is interesting, right? Because

135

00:23:59.170 –> 00:24:06.050

Shlomo Sher: they’re obviously studying the language, and you’re also dealing with multiple languages at the same time.

136

00:24:06.060 –> 00:24:23.769

Shlomo Sher: How is that? And mind you, I don’t know how that’s done by humans, either, right? Because, you know, you could be in a text chat where people will chat in, like, Arabic and Mandarin and Spanish and English in one stream, and I’ve never understood how a human being could,

137

00:24:23.780 –> 00:24:30.060

Shlomo Sher: I mean, you know, could know so many languages, and be able to effectively moderate something like this.

138

00:24:30.350 –> 00:24:43.439

camille: Well, it’s a lot of work. So at the moment we have six premium languages that we cover, six, seven different languages. So we have English. We have both

139

00:24:43.620 –> 00:24:57.860

camille: English from the UK and from the US, because culturally there are very different types of toxicity in English. We also have Portuguese, Spanish, German,

140

00:24:57.870 –> 00:25:04.529

camille: French. So these are the six premium languages that we cover today, and these are the highest performing

141

00:25:04.570 –> 00:25:09.850

camille: at the moment, but for the other languages it’s a it’s another challenge, because

142

00:25:09.900 –> 00:25:11.580

camille: first of all, I mean.

143

00:25:11.960 –> 00:25:21.729

camille: we cannot hire a linguist for all those languages to cover. Not yet, at least. We are not that big a company that can hire a linguist for every language.

144

00:25:21.780 –> 00:25:31.009

camille: But but we are working on you know, being able to moderate also all these different languages by using a translation module

145

00:25:31.100 –> 00:25:35.180

camille: that will then pass the content through

146

00:25:35.270 –> 00:25:37.929

camille: the Bodyguard technology. And then,

147

00:25:38.310 –> 00:25:42.020

camille: by doing this, it’s like. Of course, we will not

148

00:25:42.140 –> 00:25:46.730

camille: moderate Arabic languages the same way with the same

149

00:25:46.770 –> 00:25:56.050

camille: quality as we do with English at the moment, because we don’t have the linguists, but our aim is to be able to protect as many people

150

00:25:56.070 –> 00:26:08.080

camille: as possible. So it’s already a first step, and it’s already what is done on the market today in terms of moderation, anyway. But for today, what we want to do is to

151

00:26:08.190 –> 00:26:25.099

camille: protect as many people as we can. So it means that we will have to adjust. We will have to work really hard to make sure that those languages are protected to the best standards possible. But that’s how it’s working at the moment. But again, you know, it’s like

152

00:26:25.460 –> 00:26:27.110

camille: so so

153

00:26:27.190 –> 00:26:29.420

camille: many, so much data to

154

00:26:29.460 –> 00:26:50.550

Shlomo Sher: to analyze into so much work, so much what to do

155

00:26:50.560 –> 00:27:07.630

Shlomo Sher: you know, and British English, which is not even going to Australia yet, right? And if we’re talking about Arabic, I mean, you could have, you know, one set of norms in Morocco and a very different one in, you know, Lebanon, right?

156

00:27:07.640 –> 00:27:20.170

Shlomo Sher: that’s a lot of expertise needed whether you’re doing it as humans or as AI

157

00:27:20.180 –> 00:27:28.109

camille: sub languages, you know, like a very specific way to talk this language in certain regions, it’s it’s getting really really difficult.

158

00:27:29.210 –> 00:27:30.680

Shlomo Sher: Okay, okay.

159

00:27:30.750 –> 00:27:49.259

Shlomo Sher: Now, let’s jump to the actual decisions that are made. You know, you guys are a moderation software, essentially a moderation team. But, you know, what some people call moderation, other people just call censorship.

160

00:27:49.370 –> 00:27:54.260

Shlomo Sher: So is moderation censorship? Is this a limitation of free speech?

161

00:27:55.880 –> 00:28:06.060

camille: It is not. It’s not the way I see moderation, or the way we see it at Bodyguard. Because first,

162

00:28:06.190 –> 00:28:17.509

camille: you choose to moderate. The platforms that, you know, call us for help, they choose to moderate. There is also

163

00:28:17.740 –> 00:28:34.330

camille: the application that you can download. People choose to have moderation on their own page. So this is not censorship. It’s self-protection, self-preservation. There is also something that we say quite often, and I will repeat it again and again:

164

00:28:34.680 –> 00:28:44.419

camille: the freedom of some people stops where the suffering of others begins, which means, you know, sometimes you have

165

00:28:44.590 –> 00:28:52.830

camille: loud people that allow themselves to be very toxic to one another and others that because they are so afraid of.

166

00:28:53.000 –> 00:28:58.670

camille: you know, receiving those insults, receiving those toxic content, they decide to

167

00:28:58.840 –> 00:29:13.040

camille: actually shush themselves, and not participate in the conversation, and not enjoy the game. I’m one of these people, to be honest. As a woman in gaming, sometimes I,

168

00:29:13.320 –> 00:29:17.090

camille: I I almost never use the voice chat.

169

00:29:17.270 –> 00:29:27.629

camille: because I know that I’m gonna get targeted, or, you know, I’m gonna get insults. So, you know, I actually censor myself

170

00:29:27.740 –> 00:29:46.560

camille: because I’m fearing toxicity. So what we want to do, and it’s something that we work really hard on at Bodyguard, is that we want to give a voice to everyone. We want to make sure that everyone on platforms, on social media, allows themselves to

171

00:29:46.570 –> 00:29:54.550

camille: participate in the conversation, that they enjoy the experience as much as anyone else would.

172

00:29:54.560 –> 00:30:07.009

camille: And also, we do not moderate criticism. It’s not something that we do. We put a lot of effort into that, and when we start working with clients, we are very,

173

00:30:07.020 –> 00:30:21.370

camille: very clear with them: criticism will not be moderated. Toxic content, violent content will be moderated to protect your teams, to protect you. But criticism will not. So it’s really important

174

00:30:21.380 –> 00:30:27.759

camille: to see moderation as actually a tool of freedom, you know, to allow everyone to

175

00:30:28.160 –> 00:30:36.220

camille: express themselves, to participate in the conversation, not to be afraid to be out there, because, you know, minorities today are

176

00:30:36.500 –> 00:30:52.980

camille: victims of toxicity. I’ve seen a very recent study, and again, the numbers are terrifying. We need to give everyone a voice, and that contributes also to, you know, diversity on social media,

177

00:30:52.990 –> 00:31:03.059

camille: on those platforms. So to me and to Bodyguard, we see it as a tool for freedom more than censorship.

178

00:31:03.340 –> 00:31:07.529

A Ashcraft: Yeah, I like your first point. I think it’s really interesting that, you know,

179

00:31:09.360 –> 00:31:12.070

A Ashcraft: the things that get posted to my page,

180

00:31:12.780 –> 00:31:19.620

A Ashcraft: whether that I post them or somebody else posts them, are ultimately my responsibility. And so in some ways they are my speech.

181

00:31:19.670 –> 00:31:23.620

A Ashcraft: So even if Shlomo posts something to my page, and I don’t like it, I can delete it.

182

00:31:23.640 –> 00:31:29.090

camille: and that’s not censorship, because it’s my page, and people are gonna see that as my own. Yes.

183

00:31:29.290 –> 00:31:30.040

A Ashcraft: right

184

00:31:30.330 –> 00:31:36.339

Shlomo Sher: Camille, so to piggyback on that, just to see if I got the technology right:

185

00:31:36.410 –> 00:31:40.320

Shlomo Sher: did you say that this is is this more of a client

186

00:31:40.360 –> 00:31:50.780

Shlomo Sher: based, where the client turns it on? So that, rather than moderating the speech in the entire conversation, this is something I can

187

00:31:50.820 –> 00:32:10.710

Shlomo Sher: decide to turn on or turn off for myself, in terms of protecting myself, rather than moderating the entire conversation. Does that make sense, that each person can decide whether to engage with it or not?

188

00:32:10.720 –> 00:32:15.669

camille: so there are, like, two sides. So there is, like, the

189

00:32:15.850 –> 00:32:25.000

camille: the app that is now available that anyone can use, and it’s just, you know, about moderating so that you don’t see the content. But for clients, they actually choose what they want

190

00:32:25.020 –> 00:32:25.850

camille: to

191

00:32:26.120 –> 00:32:36.979

camille: appear on their page. So I just want to make one thing clear: we always moderate content that is

192

00:32:38.660 –> 00:32:49.290

camille: against the law, like racism, homophobia. All those kinds, you know, of content that are against the law, they will always be moderated. Like,

193

00:32:49.410 –> 00:33:00.109

camille: no client would say, oh, I want, you know, the N-word to stay on the page. It’s a big no-no. We have, like... Those things are not against the law in the United States.

194

00:33:00.410 –> 00:33:05.969

camille: They are in Europe. So in Europe we decide... I mean,

195

00:33:06.200 –> 00:33:08.310

camille: we are, you know, we are very

196

00:33:08.780 –> 00:33:21.240

camille: engaged with what we do. We do believe that those behaviors shouldn’t happen. So that’s where, also, the ethics part of it comes, you know,

197

00:33:21.250 –> 00:33:34.610

camille: into the game: we make strong choices at Bodyguard to always moderate those kinds of content. They will always be moderated. For the other parts, like, you know, very specific

198

00:33:34.620 –> 00:33:45.069

camille: topics, then they can decide what they want to do with the content. If they want to be permissive with their moderation, they can. If they want to be very strict with their moderation, they can.

199

00:33:45.190 –> 00:33:49.109

Yeah, I think Shlomo is thinking that, like,

200

00:33:49.600 –> 00:33:54.290

he could download it and use it to protect himself from

201

00:33:54.390 –> 00:34:10.379

Shlomo Sher: stuff on Facebook. Yes, or, like, on a website, or something like that. No, when I said client-based, I meant it in the technical sense, in the sense that the user is using client software.

202

00:34:10.389 –> 00:34:14.970

Shlomo Sher: So let’s say I’m playing a game, and I have the option on my end

203

00:34:14.989 –> 00:34:33.119

Shlomo Sher: to have the moderation on or off. Let’s say I’m playing League of Legends, and, you know, I have the ability to turn Bodyguard on for myself, so that you’re not hearing or seeing... I should say, you’re not seeing,

204

00:34:33.130 –> 00:34:42.390

Shlomo Sher: right, so it’s moderating the speech that I would be seeing, as opposed to everybody’s speech. Do you see what I mean, Camille? And Andy, that’s where I thought you were going

205

00:34:42.460 –> 00:34:49.049

A Ashcraft: with what you were talking about, my page, right? I was thinking, like, I am League of Legends,

206

00:34:49.179 –> 00:34:52.699

Shlomo Sher: and so I hire Bodyguard

207

00:34:53.400 –> 00:34:56.249

to moderate the things that are

208

00:34:57.290 –> 00:35:00.949

Shlomo Sher: sure, that are being posted onto League of Legends.

209

00:35:01.260 –> 00:35:08.929

Shlomo Sher: I see. But if that was the case, we would say, well, League of Legends is censoring the conversation, right? It’s owned by League of Legends.

210

00:35:09.000 –> 00:35:28.739

Shlomo Sher: Right? Well, you could say they can, right? Because they can do what they want, right? But you still might say that. Whereas if it was a client-based kind of software, you might say it’s an option that someone who wants moderation can turn on

211

00:35:28.880 –> 00:35:30.139

Shlomo Sher: or off

212

00:35:30.240 –> 00:35:34.839

Shlomo Sher: right. So then it becomes an individual person, kind of situation.

213

00:35:34.950 –> 00:35:42.809

camille: So it’s an individual case for the app that we are offering. But when we work with clients, they are

214

00:35:42.950 –> 00:35:49.650

camille: the users, you know. If I, as a user, want to play League of Legends, I cannot, you know,

215

00:35:49.670 –> 00:35:54.139

camille: say, I want to do that, I don’t want Bodyguard, because you are under

216

00:35:54.250 –> 00:35:55.109

camille: the

217

00:35:56.520 –> 00:36:07.240

camille: community guidelines that have been set by Riot in that case, and if Riot says we moderate all those types of content, then, you know,

218

00:36:07.420 –> 00:36:11.479

camille: it’s their choice.

219

00:36:11.500 –> 00:36:23.860

camille: but in any case, the big part of the work that we’re doing with them is actually to find, you know, the right balance with them, because obviously we don’t want to

220

00:36:24.430 –> 00:36:43.389

camille: fall into censorship, because it’s really easy to fall into censorship with moderation. It’s a lot of work with them before we integrate the solution to make sure that it’s the right balance, that it’s not, you know, moderating what shouldn’t be moderated,

221

00:36:43.400 –> 00:36:58.750

camille: but at least for homophobia and racism, it should be gone, and I don’t think it should be read by anyone. These are, like, the minimum. I’d say there is a minimum of things that we would always moderate anyway.

222

00:36:59.290 –> 00:37:07.899

Shlomo Sher: Okay. So now let’s get to the big challenges, then. And the big challenge is the challenge of moderation itself,

223

00:37:08.010 –> 00:37:12.120

Shlomo Sher: right, and I’m wondering here if there is... So

224

00:37:12.710 –> 00:37:20.540

Shlomo Sher: if you have human moderators, right, I’m assuming, and I don’t know how this works, so this is me guessing,

225

00:37:20.550 –> 00:37:48.389

Shlomo Sher: and Andy, Camille, you know, you guys can correct me on this, because I’m coming at it from... I’m just a gamer, right? I mean, I just play games, right? I don’t really know how this works. I’m assuming, let’s say, a company like Riot would traditionally have a moderation team, and they would essentially have meetings and decide for the individual moderators what it is that they should be looking for, and how it is that they should deal with those things. And then you have a

226

00:37:48.400 –> 00:37:55.560

Shlomo Sher: a policy of also what happens, right? So one is, you know,

227

00:37:56.000 –> 00:38:01.450

Shlomo Sher: are they going to actually interrupt? I don’t know how human moderators can do this in real time

228

00:38:01.480 –> 00:38:07.619

Shlomo Sher: right. It’s really, you know, in real time. It’s very, very difficult.

229

00:38:07.750 –> 00:38:11.420

camille: I’ve worked for a big publisher for four years when I was

230

00:38:11.440 –> 00:38:17.079

camille: working in anti-fraud, and what I’ve seen is that it’s not moderated at all.

231

00:38:17.240 –> 00:38:23.039

camille: What is happening is that it’s exactly. It’s not moderated. It’s

232

00:38:23.270 –> 00:38:41.660

camille: it’s kept and analyzed afterwards. And then we take sanctions, and then, if the player is not happy with that, he can complain, and then a human checks what has been happening, and then the human makes a decision. So it’s actually,

233

00:38:41.670 –> 00:39:00.769

camille: in my opinion, not the right way to do it, for so many reasons. First of all, you don’t moderate in real time. So someone shoots an insult. You have read it. It’s too late, the damage is done. You’ve read it. You feel bad. You want to quit the game, so it’s really bad for your player retention.

234

00:39:00.820 –> 00:39:09.810

camille: Second, if the player who has been toxic is banned, like, two days afterwards, like,

235

00:39:09.830 –> 00:39:28.560

camille: what’s the point? It’s too late. He probably doesn’t even remember the “fuck off” that he sent, like, two days before. So there is that, and then they are allowed to complain, which I think is fair. They have the right, you know, to disagree with the decision. But then you have a human moderator that checks,

236

00:39:28.570 –> 00:39:44.029

camille: that reads the chat logs and makes a decision according to his subjective view, without participating in the game, and then makes a decision and says, okay, maybe we should lift the ban or remove the ban.

237

00:39:44.040 –> 00:39:57.440

camille: So I do think it’s not a good experience for the players, for the toxic players, for the moderators, for the publishers. For so many reasons, I think we need to stop.

238

00:39:57.670 –> 00:40:04.089

camille: I think that’s why real time moderation is so important, and you know, because if we can prevent all of this

239

00:40:04.360 –> 00:40:05.129

to

240

00:40:05.510 –> 00:40:20.260

camille: to happen, you know, just by hiding the comment, just by hiding the insults: first, you don’t have people leaving the game because they have been insulted. You don’t have people leaving the game because they have been banned. You don’t have to ask moderators to read

241

00:40:20.270 –> 00:40:31.190

camille: toxic content, to take up company time, you know, just to read and to make a decision based on their subjective... And I think there’s one thing left out that

242

00:40:31.200 –> 00:40:44.310

Shlomo Sher: that you mentioned earlier that I’ve never thought of before, which is the whole bot situation and phishing schemes. You know, this is the kind of thing that human moderation after the fact is definitely not going to be able to do anything about.

243

00:40:44.520 –> 00:40:51.389

camille: It’s not possible. It’s not scalable for moderators. You are not going to catch

244

00:40:51.620 –> 00:41:00.759

camille: everyone by doing this. Right. This whole conversation is making me think about these Facebook groups that I’m in, that all have human moderators,

245

00:41:00.880 –> 00:41:04.220

and one of the interesting things. There’s one group

246

00:41:04.620 –> 00:41:13.169

and I’m in a variety of different groups in a variety of different topics, right? And there’s one group that for some reason the moderators are constantly fighting with

247

00:41:13.430 –> 00:41:15.550

the group.

248

00:41:15.740 –> 00:41:21.319

Shlomo Sher: Yeah, but that and it’s I feel like it’s become a game in and of itself.

249

00:41:21.950 –> 00:41:31.829

camille: Okay, right. This group is very large. It’s a fan base for a popular thing. And it’s just attracting people who are like, oh, these moderators will

250

00:41:31.930 –> 00:41:32.569

I

251

00:41:32.890 –> 00:41:38.279

Shlomo Sher: are under threat. Yeah, the moderators feel like they’re under siege, right?

252

00:41:38.440 –> 00:41:44.759

Shlomo Sher: And is this a voluntary position, or is this,

253

00:41:44.850 –> 00:41:51.299

Shlomo Sher: you know, official moderators, right? No, this is just the people who put the page up

254

00:41:51.490 –> 00:41:53.910

are moderating, trying to moderate the page.

255

00:41:54.420 –> 00:41:59.620

It feels like, and it’s the only group, of all the groups that I’ve been in, that feels like that.

256

00:41:59.860 –> 00:42:04.930

And I wonder if you see this? Do people start to try to fight the moderation?

257

00:42:05.480 –> 00:42:13.000

Shlomo Sher: Yeah, or let me piggyback on that. Right now, let’s say you’ve got an AI that’s working in real time,

258

00:42:13.210 –> 00:42:28.229

Shlomo Sher: right? You know, do you see people trying to, like, oh, look at that, the AI censored me, let me try to go around that AI? At least that AI is not going to feel battered like Andy’s moderator.

259

00:42:28.470 –> 00:42:42.339

camille: That’s, you know... but it becomes a game now, right? That’s the thing. I think it’s quite funny to see them try to beat the AI. That’s what makes it challenging, you know, because they are actually also giving us

260

00:42:42.350 –> 00:43:00.009

camille: new keys for understanding what toxicity means. We are like, oh, what’s going to be their next move? I think that’s why, for all of us with this job, you know, I think it’s so interesting. But it’s also the worst part of the job, that it’s a cat-and-mouse situation. It’s always going to be like that,

261

00:43:00.020 –> 00:43:04.700

camille: with fraud especially. They’re always gonna have, like, you know,

262

00:43:04.790 –> 00:43:21.330

camille: one move... They’re always going to be one move ahead of you, just to counter the AI. But it’s quite interesting, and that’s where we need to act fast, and that’s also why moderation is so difficult, because, you know, it’s not one technology

263

00:43:21.340 –> 00:43:40.959

camille: that will fix everything. It’s not just one type of moderation that will fix everything. That’s why we always, you know, update the technology, keep up with the people we are working on, making sure, you know, we don’t miss new trends, because there are new trends. It’s the thing that I mentioned earlier. You see people

264

00:43:41.090 –> 00:44:06.869

camille: creating letters with symbols. You know, for example, for the N-word, you have, you know, the bar and the V, and that creates an N, and then they are able to write the full word. You know, they are, like, really, you know, pretty creative people. And that’s where we always need to be on it, to keep up with everything. But obviously they will try to counter the AI, and it’s what

265

00:44:06.960 –> 00:44:11.140

camille: makes it quite interesting, but also very challenging.

266

00:44:13.440 –> 00:44:15.410

Shlomo Sher: Okay,

267

00:44:15.500 –> 00:44:21.019

Shlomo Sher: Speaking of that challenge, all right. So a lot of this is.

268

00:44:21.550 –> 00:44:26.389

Shlomo Sher: besides how fascinating just all of this is, you know,

269

00:44:26.740 –> 00:44:37.090

Shlomo Sher: for more controversial stuff, I mean, you know, people a lot of times really disagree on what is offensive, what is racist, what is sexist, what is homophobic, right? What is true?

270

00:44:37.290 –> 00:44:43.290

Shlomo Sher: All right, just true or not true right? you know, just to

271

00:44:43.440 –> 00:44:57.329

Shlomo Sher: you know, that there is no, let’s say, trans, there are only, you know, men and women, and these are fixed positions, male, female, right?

272

00:44:57.470 –> 00:45:06.999

Shlomo Sher: that is a position that is taken by some people to be transphobic or to reject a non-binary existence.

273

00:45:07.040 –> 00:45:21.880

Shlomo Sher: or even intersex. Other people will say, no, that’s just true about the world, right? And there’s lots of things like this that some people might say are racist, some people might say are sexist, and other people say, no, that’s just true. When

274

00:45:21.930 –> 00:45:40.320

Shlomo Sher: you have human moderators, right, you said this is subjective, so they look at it and analyze it subjectively. Instead, you guys have linguistic experts. Is that how you put it? Okay, so what does that mean? And how do they deal with the fact that people sometimes disagree?

275

00:45:40.330 –> 00:45:56.659

Shlomo Sher: And what recourse is there to say, look, I was talking about something real and true? It’s funny, because in the context of a game, which is very different than in the context of Twitter, and I think that matters, right? It matters where you’re talking, and maybe

276

00:45:57.110 –> 00:46:08.000

Shlomo Sher: the kind of freedom you maybe need to talk in a place like Facebook or Twitter versus in the middle of a game might be very different. But still, how do your experts handle things like that?

277

00:46:08.420 –> 00:46:18.770

camille: There are a lot of fights and heated conversations. No, actually, it’s diversity and inclusion. These are my,

278

00:46:19.220 –> 00:46:22.119

camille: I’m going to say two, because I’m not going to count, and

279

00:46:22.170 –> 00:46:37.039

camille: two favorite words in the world. They are so important. It’s a big challenge within Bodyguard, it’s true, and we don’t always have the right answer: how we make sure that

280

00:46:37.090 –> 00:46:43.549

camille: our technology fits the needs of today, and that we are, like, you know, protecting as many people

281

00:46:43.600 –> 00:46:49.339

camille: as possible. We try. It comes first with, you know, recruiting

282

00:46:49.790 –> 00:46:55.620

camille: diverse people, making sure that we don’t have only, you know, white females or

283

00:46:56.080 –> 00:47:14.630

camille: white males in the team, making sure, you know, that we have conversations, that we talk about things. It’s really, really important, because when Charles, the creator of the technology, created the technology, he was just alone in his room, you know, trying to think about

284

00:47:14.650 –> 00:47:20.160

camille: what would people dare to say behind the screen that they wouldn’t dare say, you know,

285

00:47:20.270 –> 00:47:23.720

camille: in front of other people. But you know he has only his

286

00:47:24.030 –> 00:47:32.940

camille: white male prism, you know, he’s just seeing that through his own experience. So it’s where it’s really,

287

00:47:33.120 –> 00:47:36.840

camille: and I’m going to really stress that, really important to

288

00:47:37.100 –> 00:47:55.519

camille: have people surrounding you that have different experiences, that, you know, are coming from different places, that do understand other people’s points of view, to make sure that the technology is always reflecting that. Very recently one of our

289

00:47:56.030 –> 00:48:03.639

camille: experts submitted a test to us. It was just a massive survey of, like, 100

290

00:48:03.700 –> 00:48:06.500

camille: sentences in French.

291

00:48:06.510 –> 00:48:24.709

camille: and he said, okay, so now you’re gonna do what a moderator would do. You’re gonna make a decision on those sentences. And we had to classify those by saying, oh, this is insulting, this is neutral, and this is supportive. It was the hardest, really the hardest thing I’ve ever done

292

00:48:24.720 –> 00:48:30.009

camille: in my whole career at Bodyguard, because, you know, it was just trying to put myself

293

00:48:30.250 –> 00:48:44.240

camille: out of my own experiences, because I’m going to be more sensitive about, you know, certain topics, but not so much to others, because of my experiences, because of who I am, my identity. So it was really difficult to put, you know,

294

00:48:44.600 –> 00:49:03.150

camille: myself out of my own body and experience, and to try to answer this as objectively as possible. It was impossible, and you cannot do it with just one person clicking and saying, I’m gonna remove, I’m gonna say yes. It has to be collective work. We are not...

295

00:49:03.160 –> 00:49:21.530

camille: But yes, we are very aware that we are not the police. We are not the justice. We are just here to try and empower people with our technology, empower them with our knowledge of moderation and what toxicity means, trying to put the technology in the right hands with the right guidelines,

296

00:49:21.540 –> 00:49:26.589

camille: and it’s really tough. Honestly, it’s everyday work,

297

00:49:26.920 –> 00:49:32.729

camille: questioning yourself, questioning what you’re doing, making sure that you’re always doing

298

00:49:32.990 –> 00:49:34.899

camille: what you think is the right thing.

299

00:49:35.230 –> 00:49:40.029

All right. So let me ask a question. This comes from my white guy...

300

00:49:40.310 –> 00:49:43.219

my 50-year-old white guy hat, right?

301

00:49:43.450 –> 00:49:44.350

So

302

00:49:44.460 –> 00:49:46.609

A Ashcraft: I have typed something

303

00:49:46.660 –> 00:49:47.830

into a chat

304

00:49:47.970 –> 00:49:49.450

that I think is fine.

305

00:49:49.760 –> 00:49:52.750

but the

306

00:49:53.100 –> 00:49:57.740

but it turns out somebody that I can’t imagine is going to find it offensive.

307

00:49:59.140 –> 00:50:01.159

what happens to me?

308

00:50:03.680 –> 00:50:07.839

camille: What happens to you is, it’s it’s another.

309

00:50:08.260 –> 00:50:17.640

camille: It’s really up to, first of all, the people who decided to put the moderation on, what they want to do with you.

310

00:50:17.710 –> 00:50:26.220

camille: To me, banning is not the right answer. Silencing is not the right answer, because again, I think there are degrees in

311

00:50:26.240 –> 00:50:30.279

camille: what you would say in toxicity. This is something that we

312

00:50:30.310 –> 00:50:31.700

camille: we do.

313

00:50:31.750 –> 00:50:39.560

camille: But we do care about, you know, the severity, because again, saying, you know, something really offensive and threatening someone with death is

314

00:50:39.640 –> 00:50:41.789

camille: really different.

315

00:50:41.900 –> 00:50:46.909

camille: but that’s the first. Just my my comment itself just never gets

316

00:50:47.000 –> 00:50:53.510

camille: never gets published, right? It never gets published, and you don’t know it, first thing. Right, and I don’t know. Okay. And you don’t know.

317

00:50:54.000 –> 00:50:55.470

camille: in the

318

00:50:55.590 –> 00:51:00.879

camille: in an ideal world, to me, I would like to see

319

00:51:01.020 –> 00:51:13.449

camille: other steps behind this. Maybe, you know, an explanation, or, you know, just something informative as to why your comment wouldn’t appear. Like, okay, so this is

320

00:51:13.460 –> 00:51:25.790

camille: not going to appear because, according to, I don’t know, maybe this law, or, you know, this context, you shouldn’t be using it. You know, to start informing people, because a lot of

321

00:51:26.280 –> 00:51:39.699

camille: a lot of toxic comments come from a desire to hurt other people, not, like, understanding what those words mean, out of ignorance. And I don’t want people to be toxic

322

00:51:39.710 –> 00:51:50.900

camille: at all. But I think being toxic by ignorance, because you haven’t, you know, tried to put yourself in other people’s shoes, is to me probably one of the

323

00:51:50.970 –> 00:51:53.249

camille: worst things. Like,

324

00:51:53.490 –> 00:52:04.569

camille: let’s fight ignorance, is, I think, one of the things that I would like to see, you know, having extra steps after muting the comment.

325

00:52:04.650 –> 00:52:15.970

Shlomo Sher: You know, this is one of the things I like about Reddit. Reddit moderators are usually very clear about what rule you’ve broken, and they tell you, you know, these are the rules, and you broke rule number 2.

326

00:52:15.980 –> 00:52:35.550

Shlomo Sher: But even then, I mean, a lot of times, you know, you could potentially have a back and forth, you know, I didn’t break rule number 2, how exactly does it apply? And maybe this is the sort of thing where, oh, my God, as a moderator, it would drive me crazy if I had to have these arguments with people. Right. But at least that AI could, hopefully,

327

00:52:35.830 –> 00:52:51.610

Shlomo Sher: because it takes almost no work, give you a bunch of details right there as to the thinking that led you there. At least that’s a possibility, if the company decides to provide them with that kind of feedback, and it seems only fair to provide you with the kind of feedback you’re talking about.

328

00:52:51.850 –> 00:53:04.880

camille: Yeah, that was one of the things that I was talking about with my manager last time, you know, the question of dick pics. So I don’t know how it is in the US, but in France now, we can

329

00:53:05.130 –> 00:53:16.549

Shlomo Sher: get sued for such a thing. You can, you know, pay a fine for sending dick pics. Yeah, if you’re like...

330

00:53:16.820 –> 00:53:28.839

Shlomo Sher: No. But yeah, that’s really interesting. In the US, I mean, you know, yeah, I’ve never heard of anything like that. It would be protected under free speech, I’m assuming, unless you’re...

331

00:53:29.050 –> 00:53:57.539

camille: well, I guess I don’t know, I’m not a lawyer. I’m gonna walk that one back. Well, in France, it is. You can get fined for sending a dick pic to someone, because it would be considered sexual harassment, and it’s condemned by law in France. So, you know, when someone is sending a dick pic, maybe not sending it, like, maybe moderating it and saying, oh, did you know, actually, that sending a dick pic can cost you, like, €3,000, because it is condemned by law?

332

00:53:57.550 –> 00:54:00.859

camille: And that I would like to see, because I’m pretty sure that

333

00:54:00.970 –> 00:54:19.399

camille: for all these authors sending dick pics, it would change quite a lot of things. The same thing for, you know, racism: saying, you know, that sending racist comments can cost you that much money, you can get condemned, you can go to prison, you know. I think that would change things, because

334

00:54:19.430 –> 00:54:35.020

camille: I think, and even more for the younger audiences that sometimes send those words because they see them, they just, you know, mimic the toxic behaviors, and they don’t realize how severe this can be, and I think that would, you know,

335

00:54:35.050 –> 00:54:51.079

camille: be like a cold shower to that. And, you know, again, trying to educate people. Let’s educate people. Let’s not be punitive and just ban people. Let’s educate people. I think it’s the best way to go forward with that.

336

00:54:51.480 –> 00:55:02.070

Shlomo Sher: It’s interesting that, on the one hand, you’re educating them on the rules, and how exactly they’re not meeting the rules. On the other hand, hopefully, you’re also educating them on

337

00:55:02.120 –> 00:55:03.410

Shlomo Sher: how to become.

338

00:55:03.460 –> 00:55:08.939

Shlomo Sher: you know, better people, decent human beings. That would be good,

339

00:55:08.990 –> 00:55:28.539

Shlomo Sher: hopefully, but... sorry, go ahead. I do understand why companies choose not to, though, because they don’t want to get into that conversation, right? And people are rules lawyers, right? Yes, they will poke at the rules and poke at the rules and poke at the rules until...

340

00:55:28.740 –> 00:55:31.170

camille: that’s that’s why.

341

00:55:31.720 –> 00:55:36.979

camille: that’s why it’s so difficult, you know, because on our side, we have to educate

342

00:55:37.120 –> 00:55:48.310

camille: the people on the Internet. But we also have to educate the actors that have the power to make decisions and, you know, to make safe spaces, because they don’t

343

00:55:48.320 –> 00:56:18.299

camille: sometimes really realize what’s happening in their community, or they are like, oh, you know, a bit of competitiveness is good for the game. I’m like, yes, okay, but maybe threatening someone with rape is not, you know, competitive. It’s something more. And, you know, there is also: yes, but we want to create a seamless experience for the players, you know, we want competitiveness, we want people to fight around the games. And I’m like, yes, I do understand, but maybe protect your players as well, because

344

00:56:18.310 –> 00:56:24.380

camille: it’s just, you know, so much more important. Do not forget that people still

345

00:56:24.400 –> 00:56:46.189

camille: kill themselves because they are harassed online. So this is really something that we try to educate people about. We do understand gaming can be very competitive, and it’s a business of its own, but let’s be more human, maybe for 2023 as well. Try to, you know,

346

00:56:46.430 –> 00:56:54.549

camille: protect the players, the people, the kids, the young players that are behind the screens, is something that I would like to see a bit more.

347

00:56:56.000 –> 00:57:07.940

Shlomo Sher: We’re right on it. Okay, then it seems like a good place to kind of wrap things up, where I ask you our last question and all that.

348

00:57:07.980 –> 00:57:09.569

camille: Yes.

349

00:57:09.940 –> 00:57:24.410

Shlomo Sher: All right. Do you want to take a minute to think about what you want to say? Our final question is going to be, you know, what do you want to leave our audience with? I think I’m ready. That’s fine. Okay, yes.

350

00:57:24.480 –> 00:57:28.669

Shlomo Sher: All right, Camille, we’re just about done. What do you want to leave our audience with?

351

00:57:29.600 –> 00:57:44.399

camille: First of all, let’s make 2023 the year of safety and inclusivity, of diversity and inclusion. I’ve read a study very recently that 87% of 18-to-24-year-olds

352

00:57:44.410 –> 00:57:59.240

camille: have felt cyber violence in their life. Let’s make it zero in 2023. You are not alone. People like us are trying our best to make gaming a better place. So we’re here, we’ve got your back.

353

00:57:59.780 –> 00:58:01.909

Shlomo Sher: all right. Sounds good.

354

00:58:02.010 –> 00:58:08.950

Shlomo Sher: Let’s do it in 2023. All right, Camille, thank you for coming to the show. Thank you so much for having me.

355

00:58:09.040 –> 00:58:10.339

Shlomo Sher: All right! Play nice everyone!